Heads-up displays on smart glasses? Haven't we been here before?

This week, I'm feeling crunched and a tad stressed. I'm relieved there wasn't any significant news in the Android ecosystem, and that Meta instead took the stage to fumble through its own failed technology demos. I'm also having déjà vu. (So is The Verge's Sean Hollister, but I swear I didn't realize we were writing the same thing until after I'd come up with my thesis. Clearly, we're all thinking along the same lines!)

Meta's developer conference was this week. I went last year, and the experience left me feeling like I'd been held hostage for an entire day of mediocre AI tech demos. This year seemed like more of the same, though the hardware improved. At the evening announcement keynote, Meta introduced the Meta Ray-Ban Display, which you control with the Meta Neural Band on your wrist; the second-generation Ray-Ban Metas; and the Oakley Meta Vanguard, designed for performance athletes who need to dive underwater with their surveillance goggles. There's plenty of fantastic coverage of these new products across YouTube and tech blogs that you should check out over the weekend.

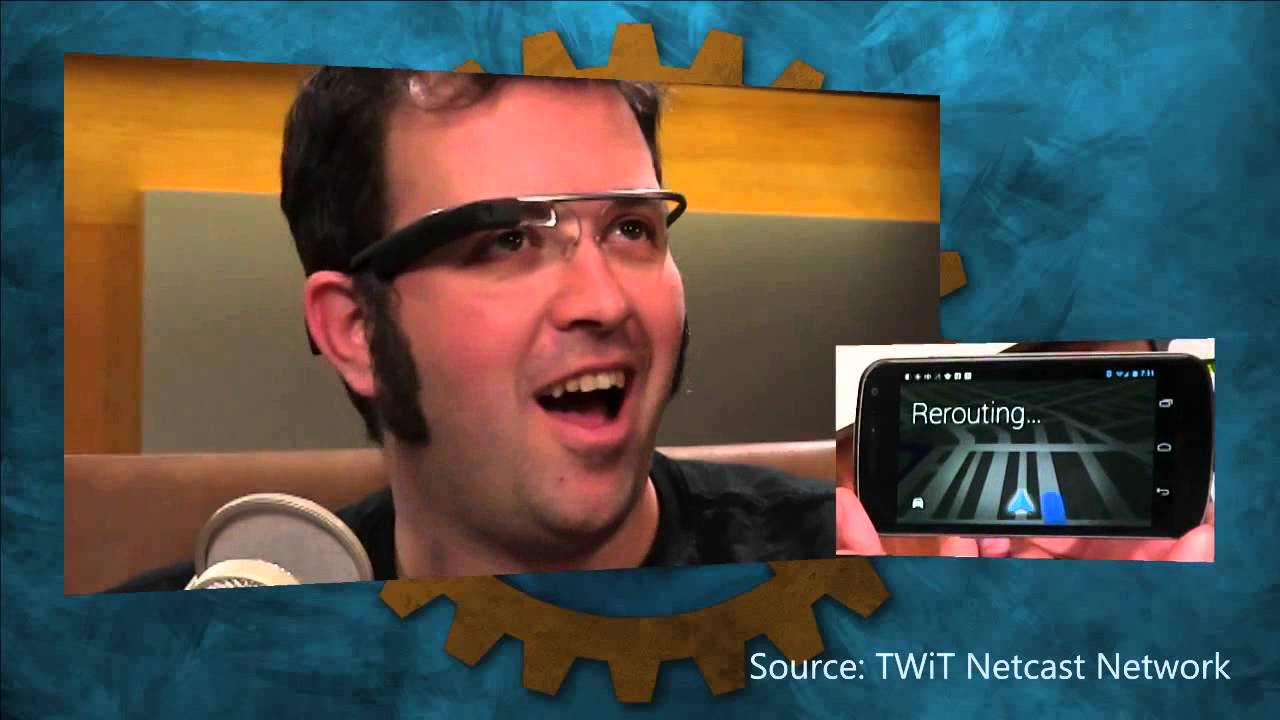

I can't help feeling like I've been here and done this already. It's all so very repetitive. I've been in this business since physical paper magazines were a thing that gave you paper cuts and disintegrated in the rain. When I finally got into online journalism (once a niche), the buzz was around a pair of smart glasses made by Google, especially after Sergey Brin had skydivers wearing them jump onto the roof of the Moscone Center. Google Glass ran a modified version of Android, known as Glass OS (now referred to as Google XE), and promised hands-free, heads-up computing. It drew intensely polarized reactions. People got punched in San Francisco for wearing them to bars, while others lauded their ability to capture life's essence hands-free. They definitely wowed Ron in the early days of All About Android (RIP).

And then they petered out. A few years later, Google refocused the technology on the enterprise sector, which made sense given its unobtrusive heads-up display.

I'm not buying into it again. I have the first-gen Ray-Ban Meta glasses, which I try to use once in a while to stay "with it" on what's happening on Meta's side of the bay. They're great for capturing first-person footage of activities like hiking and spending time with kids. They also play music ambiently while still letting you hear your surroundings. But when I wear them in crowded public places, I feel like I'm running a psyop, like a pariah who should be wearing a sign warning people that I have an internet-connected camera on my face.

None of this seems any more "okay" than it was when Google Glass was the one driving headlines. It's redundant, even if this generation's smart glasses are more stylish than those of the 2010s. Maybe the real problem is that I'm growing older and feeling the cycle of things. Or is it a deeper dread, brought on by knowing this cycle's final destination is a world where we all wear cameras while being watched by them?

Thank a deity we can finally erase that fence! Why Google didn't bother to headline this feature is beyond us. Mishaal, Jason, and I also discuss our dread over Android's open roots eroding before our very eyes.